tl;dr

I couldn’t find a code snippet for connecting ChatGPT to a WhatsApp group, so I uploaded one here:

https://github.com/icantsec/chatgpt-whatsapp-bot/

Requirements:

- A way to activate a WhatsApp account (or use your existing one, but you will not be able to interact with the bot from that account, and it will likely be banned eventually)

  - https://smspinverify.com/ may work for you, but numbers from services like this may get banned quickly

- An OpenAI API key

- Something to host this on

Introduction

While WhatsApp has Meta AI built in, it’s fairly limited in functionality and in how it can respond compared to ChatGPT. For my use case, I wanted a bot that responds satirically to questions in a group chat when they are directed at it. Meta AI is designed to be more of an assistant, so it isn’t ideal for people who just want to have fun with friends.

Existing solutions for integrating ChatGPT into WhatsApp (a popular one being ManyChat) use the WhatsApp Business API, and accounts on that API cannot be added to group chats. This is quite problematic for my use case.

Without further ado, a band-aid solution:

Venom (https://github.com/orkestral/venom) works by controlling WhatsApp Web in a headless browser, and we will use it as our WhatsApp API to read and reply to messages. It also supports features such as forwarding messages, polls, and sending images, which I won’t be using here, but if you need more features it’s worth poking around.

First, install the necessary packages:

```bash
npm i --save venom-bot openai
```

We’re going to start by creating a WhatsApp Web session, as shown below. The first time this code runs (or whenever the session expires), it prints a QR code in the terminal; scan it from your phone to add the bot as a linked device.

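```javascript
const venom = require('venom-bot');

venom
  .create({
    session: 'session-name', // name under which the login session is stored and reused
  })
  .then((client) => start(client))
  .catch((erro) => {
    console.log(erro);
  });
```

Next, we register an `onMessage` listener that runs every time we receive a new message: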

```javascript
function start(client) {
  client.onMessage((message) => {
```

Since I don’t want to process and respond to every single message in the group chat (only the ones directed at the bot), I filter for messages that begin with “@BOT_PHONE_NUMBER” (i.e. when someone starts their message by mentioning, or tagging, the bot’s account) and strip that prefix before the text is sent to ChatGPT:

```javascript
    const phone_number = "@11111111111"; // the bot's number as it appears in a mention

    // message.body can be missing on some message types, so check it first
    if (message.body && message.body.startsWith(phone_number)) {
      // Drop only the leading mention; the rest is the question for ChatGPT
      const message_text = message.body.slice(phone_number.length).trim();
```

Now that we have a message for ChatGPT, we need to send it over and get a response. This is fairly straightforward: we use OpenAI’s package and a snippet taken almost directly from their docs. I’m keeping it simple for this version, but on the GitHub page you can find a version that takes previous replies in the conversation into account, uses different system instructions per group chat, and maps hard-coded phone numbers to names for customized responses.
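The repository’s extended version isn’t reproduced here, but as a rough, hypothetical sketch of those three ideas, the `messages` array can be assembled per chat instead of being hard-coded. It assumes chats are identified by Venom’s `message.from` (the group ID for group messages) and senders by an ID such as `message.author`; the names `formatUserMessage`, `buildMessages`, `rememberTurn`, and all the IDs below are placeholders made up for illustration.

```javascript
// Hypothetical sketch, not the repository's implementation: per-chat memory,
// per-chat system prompts, and a phone-number-to-name map.

const DEFAULT_PROMPT =
  "You are a funny addition to a group chat where you will respond to questions satirically in order to fit in.";

// Per-chat system prompt overrides, keyed by chat ID (placeholder ID).
const systemPrompts = new Map([
  ["123456789-000000@g.us", "You are a sarcastic sports commentator."],
]);

// Sender IDs mapped to display names (placeholder values).
const knownNames = new Map([["15550001111@c.us", "Alice"]]);

const HISTORY_LIMIT = 10; // max past messages (user + assistant) kept per chat
const histories = new Map(); // chatId -> [{ role, content }, ...]

// Prefix the sender's name when we know it, so the model can address them.
function formatUserMessage(senderId, text) {
  const name = knownNames.get(senderId);
  return name ? `${name}: ${text}` : text;
}

// Build the `messages` array for openai.chat.completions.create().
function buildMessages(chatId, userContent) {
  const system = systemPrompts.get(chatId) ?? DEFAULT_PROMPT;
  const history = histories.get(chatId) ?? [];
  return [
    { role: "system", content: system },
    ...history,
    { role: "user", content: userContent },
  ];
}

// Store a finished exchange, dropping the oldest entries beyond the limit.
function rememberTurn(chatId, userContent, assistantReply) {
  const history = histories.get(chatId) ?? [];
  history.push(
    { role: "user", content: userContent },
    { role: "assistant", content: assistantReply }
  );
  histories.set(chatId, history.slice(-HISTORY_LIMIT));
}
```

To wire this in, `get_response` would take the chat ID and pass `buildMessages(message.from, userContent)` as `messages` in place of the fixed array, then call `rememberTurn` once the reply arrives. The history lives in memory, so it resets whenever the bot restarts, and a larger `HISTORY_LIMIT` means more tokens per request.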

The process for this basic functionality is to set up the system instructions, pass along the user’s message, and receive a response:

```javascript
const { OpenAI } = require('openai');

// Replace with your key, or omit apiKey to have the client read OPENAI_API_KEY
// from the environment instead of hard-coding it
const openai = new OpenAI({ apiKey: "your-openai-key" });

async function get_response(user_message) {
  const completion = await openai.chat.completions.create({
    model: "gpt-3.5-turbo",
    messages: [
      {
        role: "system",
        content: "You are a funny addition to a group chat where you will respond to questions satirically in order to fit in.",
      },
      {
        role: "user",
        content: user_message,
      },
    ],
  });

  // Text of the first (and only) completion choice
  return completion.choices[0].message.content;
}
```

The original prompt was not as simple as this, but unfortunately it isn’t quite postable, so adjust it as you see fit to get the response style you want. Returning to the `onMessage` listener to integrate this, we add the following:

```javascript
      get_response(message_text)
        .then((resp) => send_message(client, message, resp))
        .catch((error) => console.log(error));
    }
  });
}
```

`send_message` uses Venom’s `.reply()` function to “reply” to the original message. This makes it clear which message the bot is responding to in a busy chat, but you can use the `.sendText()` function instead if you don’t want this behavior:

```javascript
function send_message(client, responding_to, response) {
  client
    // Quotes the original message; use .sendText(responding_to.from, response)
    // instead if you don't want the bot to "reply" directly to it
    .reply(responding_to.from, response, responding_to.id)
    .then((result) => {
      console.log('Result: ', result);
    })
    .catch((erro) => {
      console.error('Error when sending: ', erro);
    });
}
```
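The mention check in the listener has a couple of rough edges: a longer number that merely starts with the bot’s digits also passes `startsWith`, and a bare mention with no question still gets forwarded to ChatGPT. A small, hypothetical helper could tighten this; `extractBotQuestion` is a made-up name, and `@11111111111` is the same placeholder number used above.

```javascript
// Hypothetical helper: returns the text addressed to the bot, or null when the
// message is not directed at it. BOT_MENTION is a placeholder number.
const BOT_MENTION = "@11111111111";

function extractBotQuestion(body, mention = BOT_MENTION) {
  // Skip messages without a string body
  if (typeof body !== "string") return null;

  const trimmed = body.trimStart();
  if (!trimmed.startsWith(mention)) return null;

  // Require whitespace (or nothing) right after the mention, so a longer
  // number that begins with the same digits does not match
  const rest = trimmed.slice(mention.length);
  if (rest !== "" && !/^\s/.test(rest)) return null;

  // Only the leading mention is removed; later mentions stay in the question
  const question = rest.trim();
  return question === "" ? null : question;
}
```

In the listener, `const message_text = extractBotQuestion(message.body);` followed by `if (message_text) { ... }` would replace both the `startsWith` check and the line that strips the prefix.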

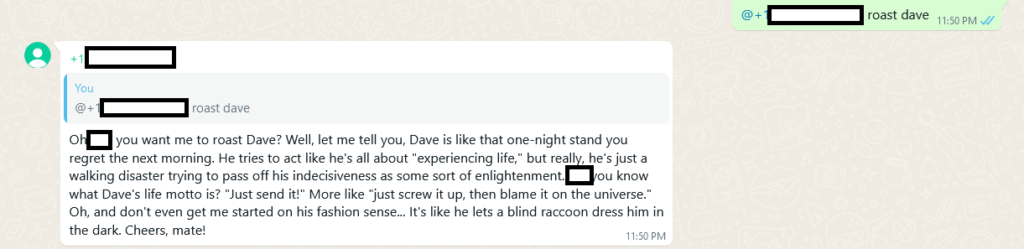

That’s about it, here’s an example response, where I added small descriptions of each member of the group chat into the system instructions: